Predictive Analytics' Path to Mainstream Adoption

Hold on to your hats, data scientists: you're in for another wild ride. A few months ago, our beloved field of predictive analytics was taken down a peg by Gartner's 2017 Hype Cycle for Analytics and Business Intelligence. In the latest report, predictive analytics moved from the “Peak of Inflated Expectations” to the “Trough of Disillusionment”. Don't despair; this is a good thing! The transition means that the silver-bullet seekers are likely moving on to the next craze and the technology is one step closer to long-term productive use. Gartner estimates that mainstream adoption is approximately 2 to 5 years away.

The Hype Cycle tracks new technology adoption from hype to productive use, along with all of the ups and downs in between.

As outlined on Wikipedia, the phases of the hype cycle are:

Technology Trigger - A potential technology breakthrough kicks things off. Early proof-of-concept stories and media interest trigger significant publicity. Often no usable products exist and commercial viability is unproven.

Peak of Inflated Expectations - Early publicity produces a number of success stories—often accompanied by scores of failures. Some companies take action; most don't.

Trough of Disillusionment - Interest wanes as experiments and implementations fail to deliver. Producers of the technology shake out or fail. Investment continues only if the surviving providers improve their products to the satisfaction of early adopters.

Slope of Enlightenment - More instances of how the technology can benefit the enterprise start to crystallize and become more widely understood. Second- and third-generation products appear from technology providers. More enterprises fund pilots; conservative companies remain cautious.

Plateau of Productivity - Mainstream adoption starts to take off. Criteria for assessing provider viability are more clearly defined. The technology's broad market applicability and relevance are clearly paying off.

In the past few years, predictive analytics has been moving steadily along the curve toward popularity. As someone in the field, I’ve been both excited and nervous about the changes. I’m thrilled to have more interested colleagues and more business support. However, I get nervous when folks jump on the trend and apply predictive analytics blindly, as if it could automatically solve any problem that involves data.

What Is Predictive Analytics, Anyway?

Simply put, predictive analytics is the process of identifying patterns in the data you have in order to estimate values for data you do not have.

The business problem frequently involves using past data to predict future outcomes. For example, a company may use last year's customer data to build a model that predicts which customers are likely to leave. Customer attributes such as demographics, spend and engagement are analyzed with statistical techniques to create a predictive model. Once the model is created, it can take in the same type of customer information (demographics, spend and engagement) for a new data set and estimate each customer's probability of leaving. With this information, a company can flag and reach out to customers who are at risk of defecting before they leave.
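To make the churn example a bit more concrete, here is a minimal Python sketch. It assumes logistic regression as the statistical technique, two made-up numeric attributes (monthly spend in hundreds of dollars, and weekly logins as a stand-in for engagement) and a 0.5 probability cut-off for flagging. The data and the names `fit_logistic` and `churn_probability` are hypothetical, and in practice you would reach for a modelling library rather than hand-rolled gradient descent.

```python
import math

def sigmoid(z):
    """Numerically stable logistic function: maps any score to a probability."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def fit_logistic(rows, labels, lr=0.1, epochs=5000):
    """Fit logistic-regression weights and bias by batch gradient descent."""
    n, k = len(rows), len(rows[0])
    weights, bias = [0.0] * k, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * k, 0.0
        for x, y in zip(rows, labels):
            # Error = predicted probability minus the actual 0/1 label
            err = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x))) - y
            for j in range(k):
                grad_w[j] += err * x[j]
            grad_b += err
        # Step against the average gradient of the log loss
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias

def churn_probability(model, x):
    """Estimated probability that a customer with attributes x will leave."""
    weights, bias = model
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))

# Made-up "last year" data: [monthly spend in $100s, weekly logins], label 1 = customer left
history = [
    ([0.2, 1], 1), ([0.3, 0], 1), ([0.1, 2], 1), ([0.5, 1], 1),
    ([0.8, 6], 0), ([0.6, 5], 0), ([0.9, 7], 0), ([0.4, 4], 0),
]
model = fit_logistic([x for x, _ in history], [y for _, y in history])

# Score a new data set with the same attributes and flag likely leavers
new_customers = {"A": [0.3, 1], "B": [0.7, 6]}
flagged = [cid for cid, x in new_customers.items() if churn_probability(model, x) > 0.5]
```

The fitted model is applied unchanged to the new customers, which is the core idea: learn from the data you have, then score the data you don't.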

Steps to Predictive Analytics Modelling

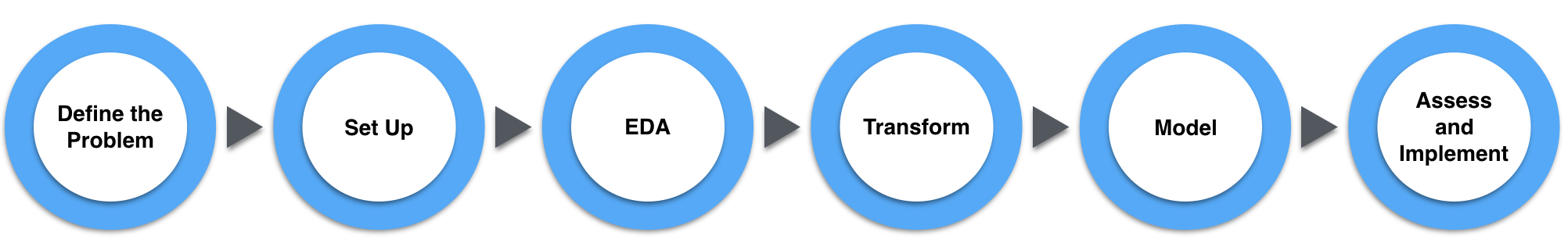

Tackling a predictive analytics problem requires more than simply throwing the data into modelling software and running off with your winning lottery ticket. Although actually creating a model can be that easy, making it effective requires an in-depth review of the problem and the available data. Often so much is learned in the pre-modelling phases that the predictive modelling strategy changes shape along the way.

While predictive analytics projects can be quite detailed and complex, the high-level tasks are straightforward. Each predictive modeller will have their own flavor of the process. Below, I've outlined what I believe to be the six major phases of creating an effective predictive model.

Define the Problem - Before you even get started, you need to understand the problem thoroughly. What are you being asked to do? What is the motivation? Understand as much about the problem landscape as possible, including business models, product function and more. Speak to subject matter experts. Speak to those who have attempted this problem before and learn from them. Soak in as much information as possible.

Set Up - Identify and access the data sources that you will analyze. Set up the tools that you will use to bring in the data and perform the analysis.
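As a rough illustration of bringing data in, here is a Python sketch that uses only the standard `csv` module. The column names (`customer_id`, `avg_monthly_spend`, `zip_code`), the inline sample and the `load_customers` helper are hypothetical; with a real file you would pass something like `open("customers.csv", newline="")` instead of the `io.StringIO` wrapper. Blank spend cells are turned into `None` so that missing values are easy to count later.

```python
import csv
import io

# Hypothetical sample standing in for a real data source
SAMPLE_CSV = """customer_id,avg_monthly_spend,zip_code
1,12.50,10001
2,,10001
3,8.00,94105
"""

def load_customers(file_obj):
    """Read customer rows, converting blank spend cells to None (missing)."""
    customers = []
    for row in csv.DictReader(file_obj):
        spend = row["avg_monthly_spend"].strip()
        customers.append({
            "customer_id": row["customer_id"],
            "avg_monthly_spend": float(spend) if spend else None,
            "zip_code": row["zip_code"],
        })
    return customers

customers = load_customers(io.StringIO(SAMPLE_CSV))
```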

Exploratory Data Analysis (EDA) - Now it's time to have fun! This is where you get to dig in and start unravelling the mystery of what is going on. It's called "Exploratory Data Analysis" because this is where you explore your data set. Evaluate every piece of information you are given to understand its construction, population, quality and relationships with other pieces of information. For example, if "Average Monthly Spend" is a customer attribute in your data set, you will want to explore the following angles:

How many missing values are in this attribute?

What is the distribution? For example, you might notice that most customers spend $10 or less.

Are there any outlier values that could throw off the ability to detect patterns? For example, are most customers spending between $5 and $50, with a few customers spending $1,000 or more?

What relationship does this attribute have with other attributes? For example, is average monthly spend strongly related to zip code?

And more! You will find yourself being amazed at the stories that unfold during this phase.
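Here is a small Python sketch of a few of those checks on a made-up "Average Monthly Spend" column. It assumes `None` marks a missing value and uses Tukey's rule of thumb (values more than 1.5 times the interquartile range beyond the quartiles) to flag outliers, which is one common convention among several. Because zip code is categorical, the relationship question is approached by comparing average spend per zip code rather than by computing a correlation coefficient. `explore_attribute` and `mean_by_group` are hypothetical helper names.

```python
import statistics
from collections import defaultdict

def explore_attribute(values):
    """Summarise one numeric attribute; None marks a missing value."""
    present = [v for v in values if v is not None]
    q1, median, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    # Tukey's fences: flag values more than 1.5 * IQR outside the quartiles
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return {
        "missing": len(values) - len(present),
        "median": median,
        "quartiles": (q1, q3),
        "outliers": [v for v in present if v < low or v > high],
    }

def mean_by_group(values, groups):
    """Average the attribute within each group (e.g. zip code), skipping missing values."""
    buckets = defaultdict(list)
    for v, g in zip(values, groups):
        if v is not None:
            buckets[g].append(v)
    return {g: statistics.mean(vs) for g, vs in buckets.items()}

# Made-up "Average Monthly Spend" values and matching zip codes
spend = [12.0, 8.5, None, 6.0, 9.5, 1200.0, 7.0, None, 10.0, 11.0]
zips = ["10001", "10001", "94105", "94105", "10001",
        "94105", "94105", "10001", "10001", "94105"]

summary = explore_attribute(spend)
by_zip = mean_by_group(spend, zips)
```

On this toy data the summary reports two missing values and flags the $1,200 customer as an outlier. That same customer drags the 94105 average up to 306, while the 10001 average sits at 10, which is exactly the kind of story worth noticing before you model.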

Transform - Before you create a model, you may need to make some adjustments to your data set to maximize model performance. The EDA from the previous step will indicate what you need to do. For example, if some attributes have missing values, you need to decide what to do with them. Do you want to replace each missing value with the overall average, replace it with a calculated value, or remove that customer from the set altogether? You may also need to decide how to handle outliers. Are you going to cap the values at a fixed value, or at a certain percentile? Will you separate out the high and low outliers and build their own special models? There are many transformations that can help your model more accurately reflect the intended audience and give you higher performance.
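Here is a minimal Python sketch of two of those choices: capping spend at a percentile and then replacing missing values with the overall average. The order is a judgment call; capping first keeps the outlier from inflating the average used for imputation. The 75th percentile is used only because the toy list is tiny; with real data you would more likely pick a higher cut-off, such as the 95th or 99th percentile. The data and the helper names (`percentile`, `cap_values`, `impute_mean`) are hypothetical.

```python
import statistics

def percentile(values, pct):
    """pct-th percentile (1-99) of the non-missing values, with linear interpolation."""
    present = [v for v in values if v is not None]
    return statistics.quantiles(present, n=100, method="inclusive")[pct - 1]

def cap_values(values, cap):
    """Clip values above `cap`; missing values (None) are left for imputation."""
    return [None if v is None else min(v, cap) for v in values]

def impute_mean(values):
    """Replace missing values with the mean of the observed values."""
    fill = statistics.mean(v for v in values if v is not None)
    return [fill if v is None else v for v in values]

spend = [12.0, 8.5, None, 6.0, 9.5, 1200.0, 7.0, None, 10.0, 11.0]

# Cap first so the $1,200 outlier does not inflate the mean used for imputation
cap = percentile(spend, 75)
cleaned = impute_mean(cap_values(spend, cap))
```

Here the cap works out to 11.25, and both missing values are filled with 9.3125, the mean of the capped values.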

Predictive Modelling - Time to create models! There are so many cool techniques out there to try that it pays to get creative. Depending on what you are trying to predict, one type of model might be more fitting than another. Logistic regression can be great for classification problems, such as predicting whether an insurance customer will make a claim (Yes or No). Linear regression can be a great choice for numerical predictions, such as predicting how much an insurance claim from a particular customer is likely to cost you ($0 to $X). You will also want to experiment with which variables to include as inputs for each type of model.
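For the numeric case, here is a minimal Python sketch of a one-input linear regression fitted by ordinary least squares, using the closed-form slope and intercept. The insurance data (number of prior claims versus the cost of the next claim) and the function name `fit_simple_linear` are made up for illustration; a real model would usually include several input variables.

```python
def fit_simple_linear(xs, ys):
    """Ordinary least squares with one input: returns (intercept, slope)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    # Slope = covariance(x, y) / variance(x), both taken around the means
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope

# Made-up data: number of prior claims vs. cost of the next claim in dollars
prior_claims = [0, 1, 2, 3, 4]
claim_cost = [480, 720, 890, 1110, 1300]

intercept, slope = fit_simple_linear(prior_claims, claim_cost)
predicted_cost = intercept + slope * 3  # a customer with 3 prior claims
```

On this toy data the fitted line is cost ≈ 494 + 203 × prior claims, so a customer with 3 prior claims is predicted to cost about $1,103.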

Assess and Implement - Now that you've created all of your candidate models, it's time to pick one. Start by reviewing basic performance statistics for each model. You'll want to measure accuracy in a way that suits both the model and the problem. For classification problems, you might look at a confusion matrix, which breaks down how many predictions of each class the model got right and wrong (ideally measured on held-out test data rather than only on the training data). For numerical predictions, you will want to look at the mean squared error (MSE). Loosely put, it tells you how far off your predictions are on average; because the errors are squared, its square root (RMSE) is often easier to read, since it is in the same units as the predictions. Beyond overall performance metrics, you will also want to consider the practicality of your model. A good way of doing this is to consider the bias-variance trade-off. A model with high bias may have oversimplified the solution and may not give the most accurate predictions. However, it is usually very intuitive, and its performance stays relatively stable as the data fluctuates. If your model is too complex (high variance), it can perform very well on the data set it was trained on but be too customized to the data it has already seen. This means that it might not generalize well to new data. Additionally, such models are often so complex that they are incredibly difficult, if not impossible, to interpret.

At the end of this step, you will weigh everything you know about your models and choose the one you think is best. You will then use that model to make predictions on new data.
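Here is a small Python sketch of the two metrics mentioned in this step: a confusion matrix for a yes/no claim classifier and the mean squared error for claim-cost predictions. The predictions are made up and assumed to come from held-out test data; `confusion_matrix` and `mean_squared_error` are hand-written helpers here, not a particular library's API.

```python
import math

def confusion_matrix(actual, predicted):
    """Count outcomes for a yes (1) / no (0) classifier."""
    counts = {"tp": 0, "fp": 0, "fn": 0, "tn": 0}
    for a, p in zip(actual, predicted):
        if a == 1 and p == 1:
            counts["tp"] += 1   # predicted a claim, and there was one
        elif a == 0 and p == 1:
            counts["fp"] += 1   # predicted a claim that never happened
        elif a == 1 and p == 0:
            counts["fn"] += 1   # missed a real claim
        else:
            counts["tn"] += 1   # correctly predicted no claim
    return counts

def mean_squared_error(actual, predicted):
    """Average of the squared prediction errors."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

# Made-up held-out results for the claim / no-claim model
claim_actual = [1, 0, 1, 1, 0, 0, 1, 0]
claim_predicted = [1, 0, 0, 1, 0, 1, 1, 0]
cm = confusion_matrix(claim_actual, claim_predicted)
accuracy = (cm["tp"] + cm["tn"]) / len(claim_actual)

# Made-up held-out results for the claim-cost model (dollars)
cost_actual = [1000, 1500, 800, 1200]
cost_predicted = [1100, 1400, 900, 1100]
mse = mean_squared_error(cost_actual, cost_predicted)
rmse = math.sqrt(mse)  # back in dollars
```

On these numbers the classifier gets 6 of 8 right (accuracy 0.75, with one false positive and one false negative), and the cost model's MSE is 10,000 squared dollars, an RMSE of $100.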

I’m Doing a Series!

The above steps can be a lot to take in! If you're like me, you are probably looking for a concrete example that you can follow along with and try for yourself. As such, I'm in the midst of shaping a tutorial for y'all using some of my favorite technologies (spoiler alert: it uses R, RStudio and Data Science Experience). The only hitch is that it ends up being far too much material to cover in one blog post. To remedy this, I decided to give the topic the respect it deserves and create a multi-part tutorial. I'm going to break the tutorial down into 4 parts! FOUR! I'm aiming to release them at a cadence of one every week or two. They will include a write-up of the steps and code that you can execute in your own environment.

Tutorial 1: Define the Problem and Set Up

Tutorial 2: Exploratory Data Analysis (EDA)

Tutorial 3: Transform

Tutorial 4: Model, Assess and Implement

I hope this blog has been beneficial to you. Please feel free to leave your comments below. Thanks for reading and stay tuned!

Written by Laura Ellis